All the messages below are just forwarded messages. If someone feels hurt by any of them, please add your comments and we will remove the post. The host/author is not responsible for these posts.

Friday, March 31, 2023

Websearch

Ranked Retrieval Evaluation - Rank Based Measures - Non- Binary relevance

Ranked Retrieval Evaluation - Rank Based Measures - Binary relevance

Wednesday, March 29, 2023

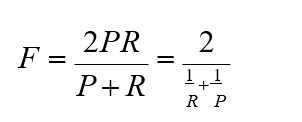

Unranked Retrieval Evaluation- Precision and Recall based on documents and F-Measure

TREC Benchmark / Measuring Relevance / Evaluating IR system

Tuesday, March 28, 2023

Anu's NLP Session on BITS WILP Pre Mid Semester Topic - 2 - DSECLZG525

Anu's NLP Session on BITS WILP Pre Mid Semester Topic - 1 - DSECLZG525

Thursday, March 9, 2023

INSTALLING HADOOP, HIVE IN WINDOWS & WORKING WITH HDFS -- Ramana (Along with recording)

Friday, March 3, 2023

Install Hive/Derby on top of Hadoop in Windows

Saturday, February 18, 2023


Mid-Semester Makeup - SPA - Question Paper with Answers - Jan 2023

Birla Institute of Technology & Science, Pilani

Work Integrated Learning Programmes Division

First Semester 2022-2023

Mid-Semester Test

(EC-2 Makeup – ANSWER KEY)

Course No. : DSECL ZC556

Course Title : Stream Processing and Analytics

Nature of Exam : Open Book

No. of Pages : 4

No. of Questions : 5

Duration : 2 Hours

Date of Exam : 06/03/2021 or 19/03/2021 (FN/AN)

Note to Students:

1. Please follow all the Instructions to Candidates given on the cover page of the answer book.

2. All parts of a question should be answered consecutively. Each answer should start from a fresh page.

3. Assumptions made, if any, should be stated clearly at the beginning of your answer.

Q1. Consider an online food ordering and delivery platform that enables customers to browse nearby restaurants at any time, explore their menu options, order food, get it delivered to their doorstep, and rate the food. The platform also enables restaurant owners to analyze the click-stream as well as historical data related to their restaurant, so that they can improve their decisions about the promotional offers made on the platform. [1 + 1 + 2 + 1 + 1 = 6]

a) Identify the different data sources involved in this scenario. Also label them as internal or external.

b) If you were asked to design the customer data stream in this scenario, what would it look like?

c) Give two examples of exploratory data analysis that restaurant owners can perform with this data. Give details.

d) Recommend a suitable machine learning technique (with adequate justification) that can help restaurant owners improve their decisions about marketing efforts.

e) Which type of system architecture would be useful in this scenario?

Answer:

a) Customer profile data, restaurant data, orders database and food delivery agents data. All of them are internal to the system.

b) It will be a mix of a few customer, restaurant and order fields:

{"timestamp", "cust_id", "rest_id", "order_id", "items", "payment_mode", .....}
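A minimal Python sketch of one record in such a customer stream, assuming JSON serialization and only the six fields named in the answer (the remaining fields stay elided). The class `OrderEvent`, the helper `to_stream_record` and the sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class OrderEvent:
    """One event in the (hypothetical) customer data stream."""
    timestamp: str      # ISO-8601 event time
    cust_id: str
    rest_id: str
    order_id: str
    items: list[str]    # item identifiers in the order
    payment_mode: str


def to_stream_record(event: OrderEvent) -> bytes:
    """Serialize an event as UTF-8 JSON, ready to publish to a stream."""
    return json.dumps(asdict(event)).encode("utf-8")


event = OrderEvent(
    timestamp="2023-01-15T12:30:00Z",
    cust_id="C101",
    rest_id="R7",
    order_id="O9001",
    items=["masala dosa", "filter coffee"],
    payment_mode="UPI",
)
print(to_stream_record(event).decode("utf-8"))
```

Keeping the record flat and self-describing lets downstream consumers pick out the customer, restaurant and order keys without joining against other sources first.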

c) Some examples:

Find out the factors affecting the ratings of the restaurant.

Find out the relationship between items that are ordered together.
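The second analysis can start from a simple count of how often two items appear in the same order (the counting step behind market-basket analysis). A minimal sketch, assuming each order is available as a list of item names; the sample orders are hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(orders):
    """Count how many orders contain each unordered pair of items."""
    counts = Counter()
    for items in orders:
        # sorted(set(...)) removes duplicates and gives each pair a canonical order
        for pair in combinations(sorted(set(items)), 2):
            counts[pair] += 1
    return counts


# Hypothetical orders from one restaurant
orders = [
    ["biryani", "raita", "coke"],
    ["biryani", "raita"],
    ["dosa", "coffee"],
    ["biryani", "coke"],
]
for pair, n in co_occurrence(orders).most_common(3):
    print(pair, n)
```

Pairs with high counts are candidates for combo offers; dividing by the number of orders gives the pair's support.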

d) A few examples:

Clustering: find customers who are similar in terms of orders, location, etc.

Regression: predict the customer rating for an order or restaurant, predict when the customer will place the next order, or estimate the value of the next order.

Classification: predict whether a customer will place an order in the next n days, or identify the customers to whom an offer/discount should be made.

Recommendation: recommend menu items to customers.
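For the clustering option, a minimal sketch of plain k-means (Lloyd's algorithm) in pure Python. The two features (orders per month, average order value) and the sample customers are hypothetical, and initialising with the first k points is a simplification; in practice the features should be scaled and a library implementation used.

```python
import math


def kmeans(points, k, iters=20):
    """Plain k-means with the first k points as initial centroids."""
    centroids = [list(p) for p in points[:k]]
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        new_centroids = [
            [sum(dim) / len(members) for dim in zip(*members)] if members else centroids[c]
            for c, members in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
    return centroids, labels


# Hypothetical customers: (orders per month, average order value in hundreds of rupees)
customers = [(1, 2.0), (2, 3.0), (1, 3.0), (15, 2.0), (16, 3.0), (14, 2.0)]
centroids, labels = kmeans(customers, k=2)
print(labels)
```

A restaurant owner could then target the frequent-customer cluster with loyalty offers and the occasional one with win-back discounts.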

e) Lambda architecture: historical data (batch layer) for prediction, and real-time data (speed layer) for ordering, tracking, etc.
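A minimal sketch of the query side of a Lambda architecture, assuming the metric of interest is an order count per restaurant. `batch_view`, `SpeedLayer` and `query` are hypothetical names; a real system would compute the batch view with a batch engine and the real-time view with a stream processor.

```python
from collections import Counter


def batch_view(historical_orders):
    """Batch layer: recompute order counts per restaurant from all history."""
    return Counter(order["rest_id"] for order in historical_orders)


class SpeedLayer:
    """Speed layer: incrementally count orders that arrived after the last batch run."""

    def __init__(self):
        self.recent = Counter()

    def on_event(self, order):
        self.recent[order["rest_id"]] += 1


def query(rest_id, batch, speed):
    """Serving layer: merge the precomputed batch view with the real-time view."""
    return batch[rest_id] + speed.recent[rest_id]


batch = batch_view([{"rest_id": "R1"}] * 3 + [{"rest_id": "R2"}])
speed = SpeedLayer()
speed.on_event({"rest_id": "R1"})
speed.on_event({"rest_id": "R1"})
print(query("R1", batch, speed))
```

When the next batch run absorbs the recent orders, the speed layer's counts for that period are discarded.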

Q2. Imagine that you are building a real-time traffic routing system. Identify and justify the communication mechanisms used to make data available to the external world in the following use cases. [6]

a) People driving around a city can use a mobile application to get updates and be re-routed based on up-to-the-moment traffic conditions. The user posts interest in a particular route through the mobile application, and in turn the system sends updates about the traffic conditions on that route.

b) The police department wants to host a web application with a real-time dashboard that continuously shows the traffic conditions at several points in the city, so that it can take appropriate action to resolve traffic congestion based on the data feeds coming from your streaming system.

c) A device is fitted into commercial passenger vehicles roaming around the city. This device can show the current traffic conditions on its display and send the vehicle's current coordinates and other relevant information back to the streaming system.

Answer:

a) Webhooks

The user registers interest in a route, and the system calls back the mobile application with the data whenever there is an update.
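A minimal sketch of the webhook pattern using only the Python standard library, assuming the receiving side exposes an HTTP endpoint that accepts a JSON POST. `WebhookRegistry`, the route name and the payload fields are hypothetical; a local `http.server` stands in for the app's callback endpoint so the sketch runs on one machine.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class WebhookRegistry:
    """Route -> callback URLs registered by interested users (kept in memory)."""

    def __init__(self) -> None:
        self.subscriptions: dict[str, list[str]] = {}
        # Ignore any HTTP proxy settings so the local demo talks to 127.0.0.1 directly.
        self.opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))

    def register(self, route: str, callback_url: str) -> None:
        self.subscriptions.setdefault(route, []).append(callback_url)

    def publish(self, route: str, update: dict) -> None:
        """POST the update as JSON to every callback registered for the route."""
        body = json.dumps({"route": route, **update}).encode("utf-8")
        for url in self.subscriptions.get(route, []):
            req = urllib.request.Request(
                url, data=body, method="POST",
                headers={"Content-Type": "application/json"},
            )
            with self.opener.open(req, timeout=5) as resp:
                resp.read()


def demo() -> list[dict]:
    """Run a local stand-in for the app's callback endpoint and push one update."""
    received: list[dict] = []

    class CallbackHandler(BaseHTTPRequestHandler):
        def do_POST(self) -> None:
            length = int(self.headers["Content-Length"])
            received.append(json.loads(self.rfile.read(length)))
            self.send_response(204)
            self.end_headers()

        def log_message(self, *args) -> None:  # silence request logging
            pass

    server = HTTPServer(("127.0.0.1", 0), CallbackHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        registry = WebhookRegistry()
        port = server.server_address[1]
        registry.register("MG Road -> Airport", f"http://127.0.0.1:{port}/traffic")
        registry.publish("MG Road -> Airport", {"status": "congested", "delay_min": 12})
    finally:
        server.shutdown()
        server.server_close()
    return received


print(demo())
```

The key property is the inversion of control: the client registers once, and the server initiates each delivery instead of the client polling.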

b) Server-sent events (SSE)

The connection between the dashboard and the system remains open, and the server pushes data to the client over it to update the dashboard.
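A minimal sketch of what the server writes on the open SSE connection: messages in the `text/event-stream` format (optional `id:` and `event:` fields, one `data:` line per line of payload, and a blank line ending each message), which a browser dashboard can read with `EventSource`. The event name and the reading fields are hypothetical; the HTTP plumbing is omitted.

```python
import json
from typing import Iterable, Iterator, Optional


def format_sse(data: str, event: Optional[str] = None, event_id: Optional[str] = None) -> str:
    """Encode one message in the text/event-stream format used by SSE."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # Multi-line data is sent as one "data:" line per line of text.
    for line in data.splitlines() or [""]:
        lines.append(f"data: {line}")
    return "\n".join(lines) + "\n\n"  # a blank line ends the message


def dashboard_stream(readings: Iterable[dict]) -> Iterator[str]:
    """Turn traffic readings into SSE messages for the open dashboard connection."""
    for i, reading in enumerate(readings):
        yield format_sse(json.dumps(reading), event="traffic", event_id=str(i))


for chunk in dashboard_stream([{"junction": "J1", "speed_kmph": 18}]):
    print(chunk, end="")
```

Because the stream is one-way (server to client), SSE fits a read-only dashboard; the `id:` field lets a reconnecting browser report the last message it saw.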

c) WebSockets

Enable two-way communication between the device and the streaming system.
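A minimal sketch of two pieces of the WebSocket protocol (RFC 6455): the `Sec-WebSocket-Accept` value the server returns during the opening handshake, and single-frame text messages in both directions (server frames unmasked, client frames masked). It only covers unfragmented text frames; a real device or server would use a WebSocket library. The function names are hypothetical.

```python
import base64
import hashlib

# GUID fixed by RFC 6455 for computing Sec-WebSocket-Accept
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(sec_websocket_key: str) -> str:
    """Server's handshake answer: base64(SHA-1(client key + GUID))."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def server_text_frame(text: str) -> bytes:
    """Encode one unmasked, unfragmented text frame (system -> device)."""
    payload = text.encode("utf-8")
    header = bytearray([0x81])  # FIN=1, opcode=0x1 (text)
    n = len(payload)
    if n < 126:
        header.append(n)
    elif n < 65536:
        header.append(126)
        header += n.to_bytes(2, "big")
    else:
        header.append(127)
        header += n.to_bytes(8, "big")
    return bytes(header) + payload


def decode_client_text_frame(frame: bytes) -> str:
    """Decode one unfragmented, masked text frame (device -> system)."""
    if frame[0] != 0x81:
        raise ValueError("expected a final text frame")
    if not frame[1] & 0x80:
        raise ValueError("client frames must be masked")
    length = frame[1] & 0x7F
    offset = 2
    if length == 126:
        length = int.from_bytes(frame[2:4], "big")
        offset = 4
    elif length == 127:
        length = int.from_bytes(frame[2:10], "big")
        offset = 10
    mask = frame[offset:offset + 4]
    payload = frame[offset + 4:offset + 4 + length]
    return bytes(b ^ mask[i % 4] for i, b in enumerate(payload)).decode("utf-8")


print(accept_key("dGhlIHNhbXBsZSBub25jZQ=="))
print(server_text_frame("Hello"))
```

After the handshake the same TCP connection carries frames both ways, which is what lets the device receive traffic updates and send its coordinates without opening new requests.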

For each sub-question: identification of the technique, 1 mark; adequate justification, 1 mark.

Q3. Consider a banking system that has 4000 ATMs situated across many places in a particular region. Bank customers can carry out many transactions, such as balance check, money withdrawal and deposit, and password reset. These transactions take 4, 8 and 6 bytes respectively. Each transaction has a timestamp attached to it, which takes 8 bytes. This transaction data needs to be stored at different places: at the ATMs, in an intermediate buffer on the streaming-system side, and in persistent storage. Narrate three use cases that require the data to be stored at these three places, estimate the sizing required for each, and suggest a suitable storage option. [6]

Answer:

a) At the ATM: perhaps just the transaction data from the last 30 minutes at that ATM. If something goes wrong with a transaction, for example a wrong password entered again and again for the same customer, an alarm should be raised immediately to check whether the person attempting the transaction is really the card owner. This requires local processing at the ATM.

In-memory databases can be used, but the sizing will vary from machine to machine and can best be estimated from the historical transaction data for that machine.
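A minimal sketch of the local check at the ATM, assuming failed attempts arrive with a card ID and a timestamp in seconds. It keeps a per-card sliding window of the last 30 minutes in memory; the alarm threshold of 3 failures and the class name `WrongPinMonitor` are hypothetical.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 30 * 60   # keep the last 30 minutes, as in the answer
MAX_FAILURES = 3           # hypothetical alarm threshold


class WrongPinMonitor:
    """Per-card sliding window of failed password attempts kept in ATM memory."""

    def __init__(self, window: int = WINDOW_SECONDS, max_failures: int = MAX_FAILURES) -> None:
        self.window = window
        self.max_failures = max_failures
        self.failures: dict[str, deque] = defaultdict(deque)  # card_id -> timestamps (s)

    def record_failure(self, card_id: str, ts: float) -> bool:
        """Record a failed attempt at time ts; return True if an alarm should be raised."""
        attempts = self.failures[card_id]
        attempts.append(ts)
        # Evict attempts that fell out of the 30-minute window.
        while attempts and attempts[0] <= ts - self.window:
            attempts.popleft()
        return len(attempts) >= self.max_failures


monitor = WrongPinMonitor()
for t in (0, 60, 120):
    alarm = monitor.record_failure("card-42", t)
print("raise alarm:", alarm)
```

Because old entries are evicted on every update, memory per ATM stays bounded by the window, which is why a small in-memory store is enough here.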

b) At the buffer: immediate trend analysis of money-withdrawal patterns, used to keep the ATMs replenished with cash most of the time.

Perhaps a day's transaction data needs to be persisted in the buffer.

Data flow tiers such as Kafka and Flume can be helpful.

For storing processed data, caching systems can be useful.

c) At the database: for historical data analysis. Both (a) and (b) benefit from this sort of permanent storage, such as databases or data stores.

Sizing will depend on the business's view of how much historical data needs to be taken into consideration.
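The sizing for all three places follows from the same arithmetic: record size = payload + 8-byte timestamp, multiplied by the number of transactions in the retention window. A minimal sketch, assuming the sizes map as balance check 4 bytes, withdrawal/deposit 8 bytes and password reset 6 bytes; the daily transaction counts per ATM are hypothetical, and overheads such as ATM IDs, serialization and replication are ignored.

```python
TIMESTAMP_BYTES = 8
# Payload sizes from the question, read as: balance check, withdrawal/deposit, password reset
PAYLOAD_BYTES = {"balance_check": 4, "withdrawal_or_deposit": 8, "password_reset": 6}
NUM_ATMS = 4000


def record_bytes(kind: str) -> int:
    """Size of one stored transaction: payload plus its 8-byte timestamp."""
    return PAYLOAD_BYTES[kind] + TIMESTAMP_BYTES


def storage_bytes(tx_counts_per_atm: dict[str, int], num_atms: int = NUM_ATMS) -> int:
    """Raw bytes needed for one window of transactions across num_atms ATMs."""
    per_atm = sum(count * record_bytes(kind) for kind, count in tx_counts_per_atm.items())
    return per_atm * num_atms


# Hypothetical daily load per ATM (not from the question)
daily_per_atm = {"balance_check": 60, "withdrawal_or_deposit": 120, "password_reset": 20}
one_atm_day = storage_bytes(daily_per_atm, num_atms=1)
buffer_day = storage_bytes(daily_per_atm)
history_year = buffer_day * 365
print(f"one ATM, one day: {one_atm_day:,} bytes")
print(f"buffer, one day (all ATMs): {buffer_day:,} bytes (~{buffer_day / 1e6:.1f} MB)")
print(f"database, one year: {history_year:,} bytes (~{history_year / 1e9:.1f} GB)")
```

The raw numbers are small; in practice, per-record overhead and replication factors usually dominate the final storage estimate.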

For each correct identification: 1 mark; explanation: 1 mark.

Q4. Answer the following questions in brief: [2 × 3 = 6]

a) How does Apache Flume blur the boundary between data motion and processing?

Answer:

Interceptors are where Flume begins to blur the line between data motion and processing. The interceptor model allows Flume not only to modify the metadata headers of an event but also to filter events according to those headers.
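Flume's own interceptors are Java classes configured on a source; the Python sketch below is only an analogy of the interceptor-chain idea, assuming an event is a header map plus a body. Each interceptor either returns the (possibly modified) event or `None` to drop it. `Event`, `add_header`, `drop_if_header` and `run_chain` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Event:
    """Simplified event: metadata headers plus an opaque body."""
    headers: dict[str, str]
    body: bytes


# An interceptor returns the (possibly modified) event, or None to drop it.
Interceptor = Callable[[Event], Optional[Event]]


def add_header(key: str, value: str) -> Interceptor:
    """Interceptor that stamps a header onto every event (modifying metadata)."""
    def intercept(event: Event) -> Optional[Event]:
        event.headers[key] = value
        return event
    return intercept


def drop_if_header(key: str, value: str) -> Interceptor:
    """Interceptor that filters out events whose header matches (processing)."""
    def intercept(event: Event) -> Optional[Event]:
        return None if event.headers.get(key) == value else event
    return intercept


def run_chain(events: list[Event], chain: list[Interceptor]) -> list[Event]:
    """Pass each event through the interceptors in order on its way to the channel."""
    out = []
    for event in events:
        current: Optional[Event] = event
        for interceptor in chain:
            current = interceptor(current)
            if current is None:
                break
        if current is not None:
            out.append(current)
    return out


chain = [add_header("host", "atm-gw-1"), drop_if_header("level", "DEBUG")]
events = [Event({"level": "DEBUG"}, b"heartbeat"), Event({"level": "ERROR"}, b"card reader fault")]
for e in run_chain(events, chain):
    print(e.headers, e.body)
```

The events are still only "in motion" toward the channel, yet the chain has already enriched and filtered them, which is the blurring the answer describes.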

b) Mention two important ways in which processed streaming data can be made available to end users.

Answer:

1) Through dashboards: by sending processed data to the visualizations placed on dashboards.

2) Through alerts/notifications: by sending important updates and exceptions to users through alerts or other notification channels.

c) "Apache Kafka adopts a prescriptive order for reading and writing operations for a topic." State whether this statement is true or false, and justify your answer.

Answer:

True. Apache Kafka is not a queuing system like ActiveMQ. It does not follow the semantics that messages are processed in the order in which they arrive. Kafka's partitioning system does not maintain such a structure: ordering is guaranteed only within a single partition, not across the partitions of a topic.
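A toy Python model of a partitioned topic, to illustrate that ordering holds per partition (and therefore per key) but not across the topic. Each partition is an append-only list and the partition is chosen by hashing the key; Kafka's default partitioner hashes keys with murmur2, so `zlib.crc32` here is only a stand-in. `PartitionedTopic` and the `atm-N` keys are hypothetical.

```python
import zlib


class PartitionedTopic:
    """Toy model of a topic: each partition is an append-only list (a log)."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: list[list[tuple[str, int]]] = [[] for _ in range(num_partitions)]

    def send(self, key: str, value: int) -> tuple[int, int]:
        """Append to the partition chosen by hashing the key; return (partition, offset)."""
        p = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1


topic = PartitionedTopic(num_partitions=3)
sent = [(f"atm-{i % 4}", i) for i in range(12)]
for key, value in sent:
    topic.send(key, value)

# Consumers read each partition independently, so messages from different
# partitions can be seen in any interleaving; within a partition the offsets
# (and so the per-key order) match the send order.
for p, messages in enumerate(topic.partitions):
    print(p, messages)
```

An application that needs strict ordering for an entity (for example, one ATM) therefore uses that entity's ID as the message key.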

Q5. Consider the following block diagram of a data flow system based on Apache Flume. [1 + 4 + 1 = 6]

a) Identify the essential components from the perspective of the Flume agent.

b) Provide suitable configuration details for an Apache Flume agent that match this data flow scenario.

c) Which type of data flow is represented by this block diagram?

Answer:

a) Three essential components of a Flume agent:

· Sources: Avro source, Thrift source and Syslog source for port monitoring data

· Channels: Memory channel

· Sinks: HDFS sink, Elasticsearch sink

Identification of sources, channels and sinks: 1 mark

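b) The agent configuration should define the three sources, the channel and the two sinks, and map each source and sink to the channel. Component lists are space-separated, property keys use the plural prefixes sources, channels and sinks, a source is bound with the channels property while a sink is bound with channel, and the agent name must be spelled identically in every key. The bind addresses, ports, HDFS path and Elasticsearch host below are example values.

myAgent.sources = myAvroSource myThriftSource mySyslogSource
myAgent.channels = myMemoryChannel
myAgent.sinks = myHDFSSink myESSink

myAgent.sources.myAvroSource.type = avro
myAgent.sources.myAvroSource.bind = 0.0.0.0
myAgent.sources.myAvroSource.port = 4141
myAgent.sources.myThriftSource.type = thrift
myAgent.sources.myThriftSource.bind = 0.0.0.0
myAgent.sources.myThriftSource.port = 4142
myAgent.sources.mySyslogSource.type = syslogtcp
myAgent.sources.mySyslogSource.host = 0.0.0.0
myAgent.sources.mySyslogSource.port = 5140

myAgent.channels.myMemoryChannel.type = memory

myAgent.sinks.myHDFSSink.type = hdfs
myAgent.sinks.myHDFSSink.hdfs.path = hdfs://namenode:8020/flume/events
myAgent.sinks.myESSink.type = elasticsearch
myAgent.sinks.myESSink.hostNames = localhost:9300

myAgent.sources.myAvroSource.channels = myMemoryChannel
myAgent.sources.myThriftSource.channels = myMemoryChannel
myAgent.sources.mySyslogSource.channels = myMemoryChannel
myAgent.sinks.myHDFSSink.channel = myMemoryChannel
myAgent.sinks.myESSink.channel = myMemoryChannel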

Definition of source, sink and channel: 0.5 mark

Configuration of source: 1 mark

Configuration of channel: 0.5 mark

Configuration of sink: 1 mark

Mapping between source/sink and channel: 1 mark

c) Fan-in flow: from multiple sources to a single channel.
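A minimal Python sketch of the fan-in shape using threads and a bounded `queue.Queue` as the shared channel: several sources put events into one channel and a single sink drains it. This models the topology only, not Flume's transactions or delivery guarantees; the source names, the capacity of 10 and the helper functions are hypothetical.

```python
import queue
import threading


def source(name: str, n: int, channel: queue.Queue) -> None:
    """One source putting n events into the shared channel."""
    for seq in range(n):
        channel.put({"source": name, "seq": seq})  # blocks while the channel is full


def sink(channel: queue.Queue, expected: int, out: list) -> None:
    """A single sink draining the shared channel."""
    for _ in range(expected):
        out.append(channel.get())


def run_fan_in(source_names: list[str], events_per_source: int) -> list[dict]:
    channel: queue.Queue = queue.Queue(maxsize=10)  # bounded, like a channel with fixed capacity
    delivered: list[dict] = []
    total = len(source_names) * events_per_source
    workers = [threading.Thread(target=sink, args=(channel, total, delivered))]
    workers += [
        threading.Thread(target=source, args=(name, events_per_source, channel))
        for name in source_names
    ]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return delivered


events = run_fan_in(["avro", "thrift", "syslog"], events_per_source=50)
print(len(events), "events delivered")
```

Events from different sources interleave in the channel, but each source's own events keep their relative order because the channel is FIFO.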

********************