All the messages below are forwarded messages. If someone feels hurt by any of them, please add a comment and we will remove the post. The host/author is not responsible for these posts.

Saturday, April 8, 2023

Cassandra

Thursday, March 9, 2023

INSTALLING HADOOP, HIVE IN WINDOWS & WORKING WITH HDFS -- Ramana (Along with recording)

Friday, March 3, 2023

Install Hive/Derby on top of Hadoop in Windows

Monday, January 9, 2023

BITS-WILP-BDS-Regular 2023-Mid Semester

Q1. Discuss briefly 3 key issues that will impact the performance of a data parallel application and need careful optimization.

Q2. The CPU of a movie streaming server has an L1 cache reference time of 0.5 ns and a main memory reference time of 100 ns. During peak hours, L1 cache hits were found to be 23% of the total memory references. [Marks: 4]

- Calculate the cache hit ratio h.
- Find the average time (Tavg) to access memory.
- If the size of the cache memory is doubled, what will be the impact on h and Tavg?
- If there is a total failure of the cache memory, calculate h and Tavg.
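A minimal sketch of the Q2 arithmetic, assuming the sequential ("look-through") model in which a miss pays the cache lookup and then the memory access: Tavg = h * Tc + (1 - h) * (Tm + Tc). The function name `avg_access_time` is illustrative only.

```python
def avg_access_time(h: float, t_cache: float, t_mem: float) -> float:
    """Tavg = h*Tc + (1 - h)*(Tm + Tc); assumes a miss checks the cache first, then memory."""
    return h * t_cache + (1 - h) * (t_mem + t_cache)

T_CACHE_NS = 0.5   # L1 cache reference time from the question
T_MEM_NS = 100.0   # main memory reference time from the question

# Parts 1 and 2: hits are 23% of all references, so h = 0.23
h = 0.23
t_avg = avg_access_time(h, T_CACHE_NS, T_MEM_NS)
print(f"h = {h}, Tavg = {t_avg:.3f} ns")

# Part 4: total cache failure means no hits, so h = 0
t_fail = avg_access_time(0.0, T_CACHE_NS, T_MEM_NS)
print(f"h = 0.0, Tavg = {t_fail:.3f} ns")
```

For part 3, doubling the cache would be expected to raise h (fewer misses) and therefore lower Tavg, but the question gives no miss-rate model, so no exact value follows. For part 4, if the failed cache is bypassed entirely rather than still probed, Tavg would be Tm = 100 ns instead.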

Q3. A travel review site stores (user, hotel, review) tuples in a data store, e.g. (“user1”, “hotel ABC”, “<review>”). The data analysis team wants to know which user has written the most reviews and which hotel has been reviewed the most. Write MapReduce pseudo-code to answer these questions. [Marks: 4]
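One way to structure the Q3 answer is a two-stage job, simulated here in plain Python. The first stage counts reviews per user and per hotel; the second re-keys the totals by kind so one reducer call sees all users and another sees all hotels, then keeps the maximum. The sample tuples and function names are hypothetical; ties are broken by whichever key is seen first.

```python
from collections import defaultdict

# Hypothetical sample data in the (user, hotel, review) shape from the question
SAMPLE = [
    ("user1", "hotel ABC", "Clean rooms"),
    ("user1", "hotel XYZ", "Noisy at night"),
    ("user2", "hotel ABC", "Good breakfast"),
    ("user3", "hotel ABC", "Friendly staff"),
]

def mapper(record):
    """Emit a count of 1 for the user and for the hotel of each review."""
    user, hotel, _review = record
    yield ("user", user), 1
    yield ("hotel", hotel), 1

def count_reducer(key, values):
    """Stage 1 reducer: total reviews per (kind, name) key."""
    return key, sum(values)

def max_reducer(kind, counts):
    """Stage 2 reducer: pick the (name, total) pair with the largest total."""
    return kind, max(counts, key=lambda name_total: name_total[1])

def run_job(records):
    # Stage 1: map, shuffle (group by key), reduce
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    totals = [count_reducer(key, values) for key, values in groups.items()]

    # Stage 2: re-key by kind ("user" / "hotel") and find the maximum
    by_kind = defaultdict(list)
    for (kind, name), total in totals:
        by_kind[kind].append((name, total))
    return dict(max_reducer(kind, counts) for kind, counts in by_kind.items())

print(run_job(SAMPLE))
```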

Q4. An e-commerce site stores (user, product, rating) tuples for data analysis, e.g. (“user1”, “product_x”, 3), where the rating is from 1 to 10 with 10 being the best. A user can rate many products and a product can be rated by many users. Write MapReduce pseudo-code to find the range (min and max) of ratings received for each product, so that each output record contains (<product>, <min rating> to <max rating>).

[Marks: 4]
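A possible shape for the Q4 job, again simulated in plain Python with hypothetical sample data. The mapper emits each rating as a (min, max) pair keyed by product; because merging such pairs is associative, the same reducer logic could also be used as a combiner to shrink shuffle traffic (a design choice, not something the question requires).

```python
from collections import defaultdict

# Hypothetical sample tuples in the (user, product, rating) shape from the question
SAMPLE = [
    ("user1", "product_x", 3),
    ("user2", "product_x", 9),
    ("user3", "product_x", 6),
    ("user1", "product_y", 7),
]

def mapper(record):
    """Emit product -> (rating, rating) so partial and final results share one shape."""
    _user, product, rating = record
    yield product, (rating, rating)

def reducer(product, pairs):
    """Merge (min, max) pairs for one product."""
    lo = min(pair[0] for pair in pairs)
    hi = max(pair[1] for pair in pairs)
    return product, (lo, hi)

def run_job(records):
    groups = defaultdict(list)  # shuffle: group mapper output by product
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    results = {}
    for product, pairs in groups.items():
        _, (lo, hi) = reducer(product, pairs)
        results[product] = f"{lo} to {hi}"  # (<product>, <min rating> to <max rating>)
    return results

print(run_job(SAMPLE))
```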

Q5. Name a system and explain how it utilises the concepts of data and tree parallelism.

[Marks: 3]

Q6. An enterprise application consists of a 2-node active-active application server cluster connected to a 2-node active-passive database (DB) cluster. Both tiers need to be working for the system to be available. Over a long period of time, it has been observed that an application server node fails every 100 days and a DB server node fails every 50 days. A passive DB node takes 12 hours to take over from a failed active node. Answer the following questions.

[Marks: 4]

- What is the overall MTTF of the 2-tier system?
- Assume only a single failure at any time, either in the App tier or in the DB tier, and an equal probability of an App or a DB node failure. What is your estimate of the availability of the 2-tier system?
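A sketch of one common reading of Q6, not an official answer. It assumes every node failure counts as a system failure event, so failure rates add (1/MTTF = sum of 1/MTTF_i over all four nodes); that an App-tier failure causes no outage because the active-active peer keeps serving; and that a DB failure costs the 12-hour failover. With equal odds of either, the average MTTR is the mean of the two repair times, and availability is MTTF / (MTTF + MTTR).

```python
APP_NODE_MTTF_DAYS = 100.0
DB_NODE_MTTF_DAYS = 50.0
DB_FAILOVER_DAYS = 12 / 24  # passive DB node takes 12 hours to take over

def combined_mttf(node_mttfs):
    """Assumption: any node failure is a system failure event, so failure rates add."""
    return 1.0 / sum(1.0 / m for m in node_mttfs)

mttf = combined_mttf([APP_NODE_MTTF_DAYS] * 2 + [DB_NODE_MTTF_DAYS] * 2)

# Assumption: App failure -> 0 downtime (active-active), DB failure -> 12 h failover,
# each equally likely, as stated in the question's second part.
mttr = 0.5 * 0.0 + 0.5 * DB_FAILOVER_DAYS

availability = mttf / (mttf + mttr)
print(f"MTTF = {mttf:.2f} days, MTTR = {mttr:.2f} days, A = {availability:.2%}")
```

Other readings (for example, treating the active-active App tier as failing only when both nodes are down) would give a different MTTF, so the assumptions should be stated alongside any answer.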

Q7. In each of the following application scenarios, state which is more important, consistency or availability, when a system failure results in a network partition in the backend distributed DB. Briefly explain the reason behind your answer.

[Marks: 4]

(a) A limited-quantity discount offer on a product for 100 items at an online retail store is almost 98% claimed.

(b) An online survey application records inputs from millions of users across the globe.

(c) A travel reservation website is trying to sell rooms at a destination that is seeing very few bookings.

(d) A multi-player game with virtual avatars and users from all across the world needs a set of sequential steps between team members to progress across game milestones.

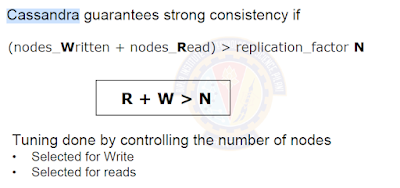

Q8. Assume that you have a NoSQL database with 3 nodes and a configurable replication factor (RF). R is the number of replicas that participate in returning a read request. W is the number of replicas that need to be updated to acknowledge a write request. In each of the cases below, explain why data is consistent or inconsistent for read requests.

[Marks: 4]

1. RF=1, R=1, W=1.
2. RF=2, R=1, W=Majority/Quorum.
3. RF=3, R=2, W=Majority/Quorum.
4. RF=3, R=Majority/Quorum, W=3.
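The usual rule of thumb for Q8 is that reads are strongly consistent when the read and write replica sets must overlap, i.e. R + W > RF, with a majority/quorum taken as floor(RF/2) + 1. The sketch below checks the four cases under that rule; it assumes no node failures or hinted/sloppy-quorum writes, which can weaken the guarantee in practice. The helper names are illustrative.

```python
def quorum(rf: int) -> int:
    """Majority of RF replicas: floor(RF/2) + 1."""
    return rf // 2 + 1

def overlaps(rf: int, r: int, w: int) -> bool:
    """Every read set intersects every acknowledged write set iff R + W > RF."""
    return r + w > rf

CASES = [
    (1, 1, 1),           # 1. RF=1, R=1, W=1
    (2, 1, quorum(2)),   # 2. RF=2, R=1, W=quorum (=2)
    (3, 2, quorum(3)),   # 3. RF=3, R=2, W=quorum (=2)
    (3, quorum(3), 3),   # 4. RF=3, R=quorum (=2), W=3
]

for rf, r, w in CASES:
    verdict = "consistent" if overlaps(rf, r, w) else "possibly inconsistent"
    print(f"RF={rf}, R={r}, W={w}: R+W={r + w} -> {verdict}")
```

Under this rule all four cases satisfy R + W > RF. Case 1 is trivially consistent because there is only one copy, though it has no redundancy.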

Thursday, January 5, 2023

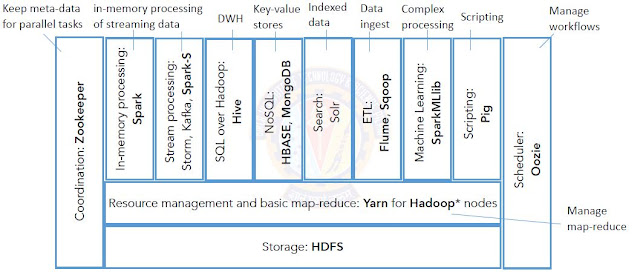

Hadoop 2 - Architecture

MapReduce Programming Architecture and flow

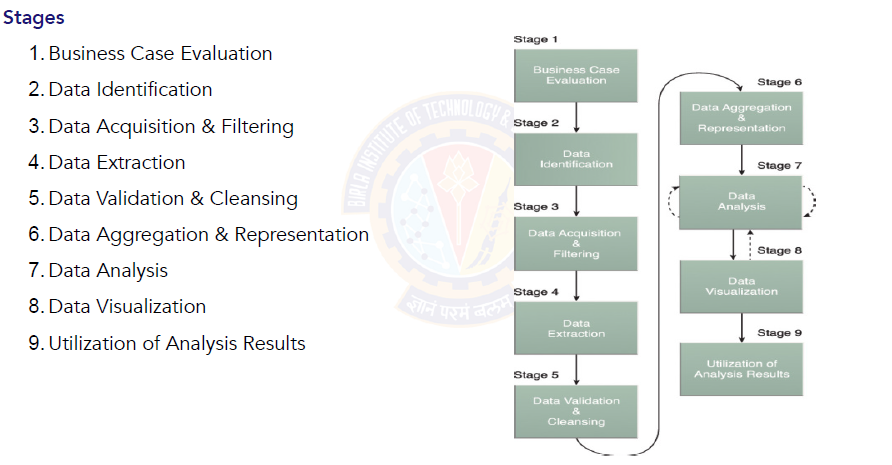

Big Data Analytics Lifecycle

Mean Time to Failure and other Formulas

Wednesday, January 4, 2023

Cache performance and Access time of memories

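Hit + Miss = Total CPU references

Hit ratio:

$$h = \frac{\text{Hit}}{\text{Hit} + \text{Miss}}$$

Tavg = average time to access memory, where Tc is the cache access time and Tm is the main memory access time:

$$T_{avg} = h \cdot T_c + (1 - h)(T_m + T_c)$$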