All the messages below are just forwarded messages if some one feels hurt about it please add your comments we will remove the post.Host/author is not responsible for these posts

Monday, January 9, 2023

BITS-WILP-SPA-Regular 2020-Mid Semester

Thursday, January 5, 2023

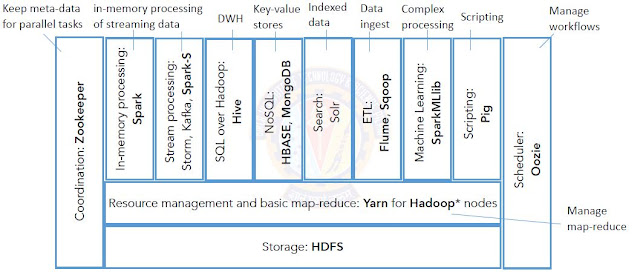

Hadoop 2 - Architecture

MapReduce Programming Architecture and flow

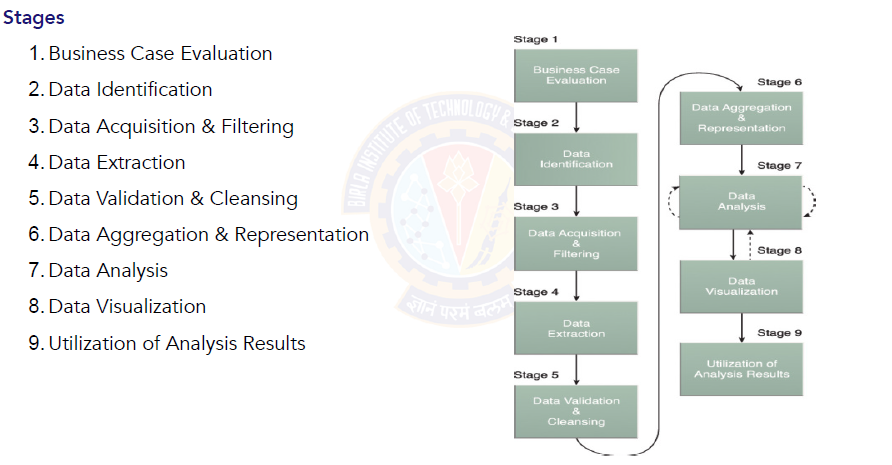

Big Data Analytics Lifecycle

Mean Time Failure and other Formulas

Wednesday, January 4, 2023

Cache performance and Access time of memories

Hit + Miss = Total CPU ReferenceHit Ratio h = Hit / ( Hit + Miss )

Tavg = Average time to access memoryTavg = h * Tc + ( 1-h ) * ( Tm + Tc )

Big Data Architecture Challenges

Apache Technology Ecosystem

Big Data architecture style

Tf-IDf in information Retrieval

The tf-idf (term frequency-inverse document frequency) is a measure of the importance of a word in a document or a collection of documents. It is commonly used in information retrieval and natural language processing tasks.

The formula for calculating tf-idf is:

tf-idf = tf * idf

where:

tf (term frequency) is the frequency of the word in the document. It can be calculated as the number of times the word appears in the document divided by the total number of words in the document.

idf (inverse document frequency) is a measure of the rarity of the word. It can be calculated as the logarithm of the total number of documents divided by the number of documents that contain the word.

The resulting tf-idf score for a word reflects both the importance of the word in the specific document and its rarity in the collection of documents. Words that are common across all documents will have a lower tf-idf score, while words that are specific to a particular document and rare in the collection will have a higher tf-idf score.

----------------------------------

The tf-idf (term frequency-inverse document frequency) is a measure of the importance of a word in a document or a collection of documents. It is commonly used in information retrieval and natural language processing tasks.

The formula for calculating tf-idf is:

tf-idf = tf * idf

where:

tf (term frequency) is the frequency of the word in the document. It can be calculated as the number of times the word appears in the document divided by the total number of words in the document.

idf (inverse document frequency) is a measure of the rarity of the word. It can be calculated as the logarithm of the total number of documents divided by the number of documents that contain the word.

The resulting tf-idf score for a word reflects both the importance of the word in the specific document and its rarity in the collection of documents. Words that are common across all documents will have a lower tf-idf score, while words that are specific to a particular document and rare in the collection will have a higher tf-idf score.

----------------------

Jaccard Coefficient - Information Retrieval

Minimum Edit Distance - Information Retrieval

K-Gram Index (Bigram Indexes) - Information Retrieval

Tuesday, January 3, 2023

Permuterm index - Information Retrieval

Difference between Word/term/token and type

Issues with Information Retrieval

Information Retrieval vs Data Retrieval -- Tabular form

Monday, January 2, 2023

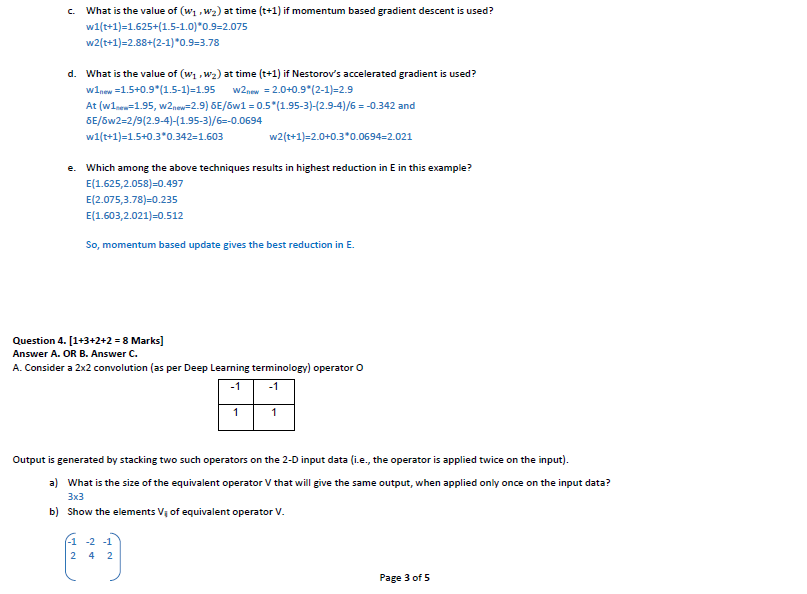

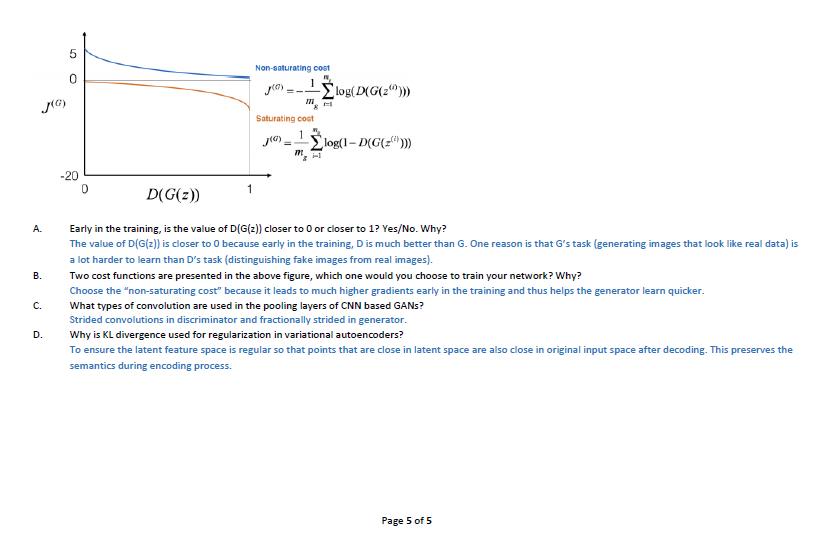

Deep Learning - Mid Semester - Makeup - DSECLZG524

Sunday, January 1, 2023

Information Retrieval -- DSECLZG537 - Mid Semester Question Paper - June 2021

Birla

Institute of Technology & Science, Pilani

Work-Integrated

Learning Programmes Division

June

2021

Mid-Semester

Test

(EC-1

Regular)

|

Course No. : SS ZG537

Course Title : INFORMATION RETRIEVAL

Nature of Exam : Closed Book

Weightage : 30%

Note:

1. Please

follow all the Instructions to Candidates given on the cover page of the

answer book.

2. All

parts of a question should be answered consecutively. Each answer should start

from a fresh page.

3. Assumptions

made if any, should be stated clearly at the beginning of your answer.

Q1

– 2+5+3+5=15 Marks

A) Give an example of uncertainty and

vagueness issues in Information retrieval [2

Marks]

B) Explain the merge algorithm

for the query “Information Retrieval”? What is the best order for query

processing for the query “BITS AND Information AND Retrieval”? What Documents

will be returned as output from the 15 documents? [5 Marks]

Solution:

Merge Algorithm - Intersecting two posting lists : Algorithm

Output document - 11

C)

[3 Marks]

D)

Build inverted index using Blocked

sort-based Indexing for 50 million records. Explain the algorithm in

detail with respect to indexing 50 million records. [5 Marks]

Q2 – 5+5+5=15 Marks

A) Assume

a corpus of 10000 documents. The

following table gives the TF and DF values for the 3 terms in the corpus of

documents. Calculate the logarithmic TF-IDF values. [5

Marks]

|

Term |

Doc1 |

Doc2 |

Doc3 |

|

bits |

15 |

5 |

20 |

|

pilani |

2 |

20 |

0 |

|

mtech |

0 |

20 |

15 |

|

Term |

dft

|

|

bits |

2000 |

|

pilani |

1500 |

|

mtech |

500 |

B)

Classify the test document d6 into c1 or c2 using naïve bayes classifier. The documents

in the training set and the appropriate class label is given below. [5 Marks]

|

|

Docid |

Words in document |

c= c1 |

c= c2 |

|

Training

Set |

d1 |

positive |

Yes |

No |

|

|

d2 |

Very

positive |

Yes |

No |

|

|

d3 |

Positive

very positive |

Yes |

No |

|

|

d4 |

very

negative |

No |

Yes |

|

|

d5 |

negative

|

No |

Yes |

|

Test

Set |

d6 |

Negative

positive very positive |

? |

? |

C)

The search engine ranked results on 0-5 relevance scale: 2, 2, 3, 0, 5.

Calculate the NDCG metric for the same. [5

Marks]