All the messages below are forwarded messages. If someone feels hurt by any of them, please add a comment and we will remove the post. The host/author is not responsible for these posts.

Friday, December 30, 2022

Tokenization Issues - Information Retrieval

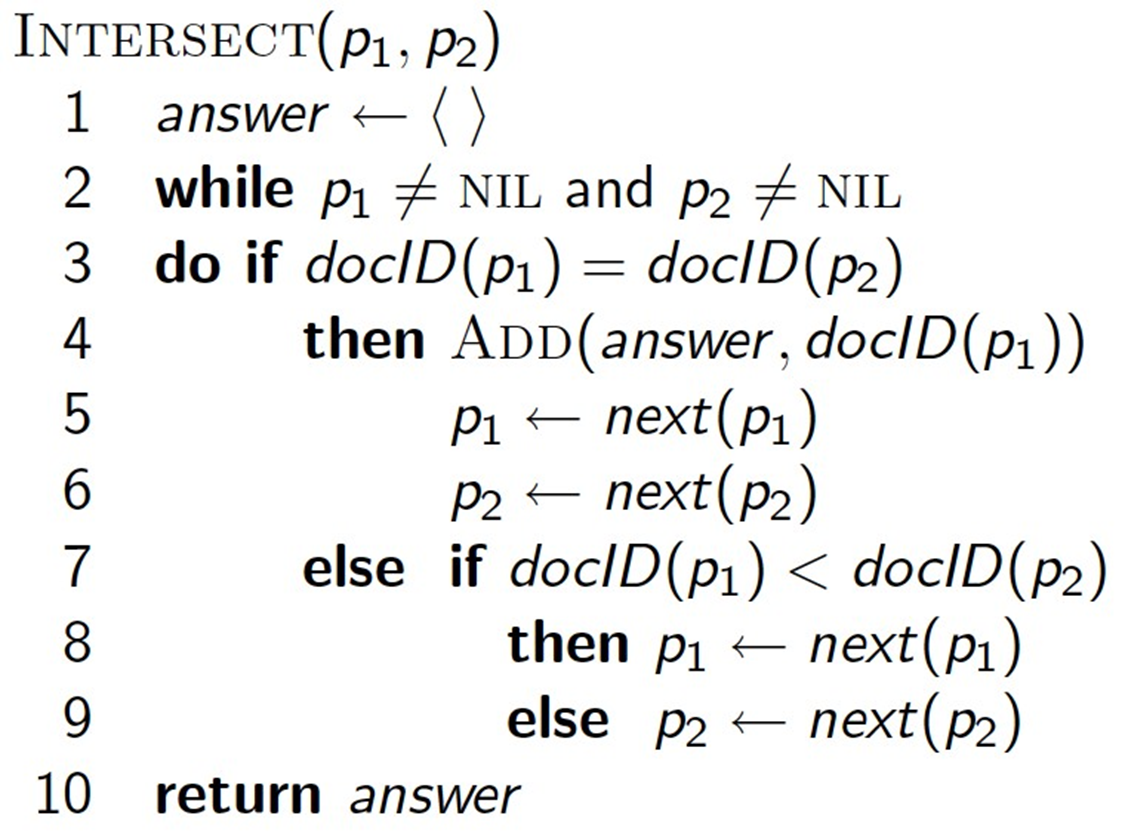

Merge Algorithm - Intersecting two posting lists - Information Retrieval

Wednesday, December 28, 2022

Inverted index construction - Information Retrieval

Tuesday, December 27, 2022

Evaluation Measures - Information Retrieval

Functional View of Paradigm IR System - Information Retrieval

The Process of Retrieving Information - Information Retrieval

Data Retrieval vs Information Retrieval

Thursday, September 29, 2022

BITS-WILP-DSECLZG555 - Data Visualization and Interpretation - DVI - Final Question paper - 25092022

BITS-WILP-DSECLZG565 - Machine Learning - ML - Final Question paper - 25092022

BITS-WILP-DSECLZG523 - Introduction to Data Science - IDS - Final Question paper - 18092022

BITS-WILP-DSECLZC413 - Introduction to Statistical Methods - ISM - Final Question paper - 18092022

Saturday, September 24, 2022

DSECLZG565 - MACHINE LEARNING - Quick Calculators

DSECLZG555 - DATA VISUALIZATION AND INTERPRETATION - Storytelling Strategies

DSECLZG555 - DATA VISUALIZATION AND INTERPRETATION - Gestalt Principles of Visual Perception

- Law of Prägnanz (Simplicity)

- Law of Similarity

- Law of Continuity

- Law of Focal Point

- Law of Proximity

- Law of Figure/Ground

- Principle of Enclosure

- Principle of Closure

- Principle of Continuity

- Principle of Connection

- Principle of Proximity

- Principle of Similarity

DSECLZG555 - DATA VISUALIZATION AND INTERPRETATION - Mistakes in Dashboard design

Easy way of converting a Google Colab .ipynb notebook to a PDF file

Saturday, September 17, 2022

BITS WILP - DSECLZC413 - Introduction to Statistical Methods - Important calculators

Thursday, July 7, 2022

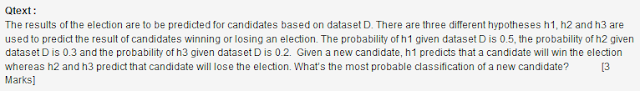

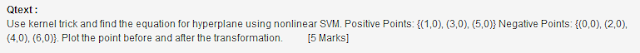

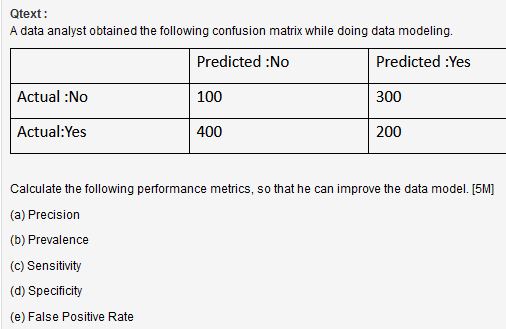

BITS-WILP-Machine Learning - ML - Comprehensive Examination-Regular - 2019-2020

Birla Institute of Technology & Science, Pilani

Work Integrated Learning Programmes Division

Second Semester 2019-20

M.Tech. (Data Science and Engineering)

Comprehensive Examination (Regular)

Course No. : DSECLZG565

Course Title : MACHINE LEARNING

Nature of Exam : Open Book

Weightage : 40%

Duration : 2 Hours

Date of Exam: July 12, 2020

Time of Exam: 10:00 AM – 12:00 PM

Note: Assumptions made, if any, should be stated clearly at the beginning of your answer.

Question 1. [3+3+2+3=11 marks]

Suppose you flip a coin with unknown bias θ, where P(x = H | θ) = θ, five times and observe the outcome HHHHH.

What is the maximum likelihood estimator for θ? [1 mark]

Would you think this is a good estimator? If not, why not? [2 marks]
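A short worked derivation of the maximum likelihood estimate, assuming the five flips are independent (the question implies this but does not state it):

```latex
L(\theta) = P(\mathrm{HHHHH} \mid \theta) = \theta^{5}, \qquad
\ell(\theta) = \log L(\theta) = 5\log\theta \\
\frac{d\ell}{d\theta} = \frac{5}{\theta} > 0 \ \text{ for all } \theta \in (0, 1]
\;\Rightarrow\;
\hat{\theta}_{\mathrm{MLE}} = 1 = \frac{\#\text{heads}}{n} = \frac{5}{5}
```

Because the likelihood keeps increasing up to the boundary, the maximum sits at θ = 1, which assigns probability 0 to tails after only five flips. This is the usual overfitting concern with MLE on small samples. One common remedy (not asked for in the question) is Laplace smoothing, which would give (5 + 1)/(5 + 2) = 6/7.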

A disease has four symptoms, and a physician's past records contain the following data. Use a Naïve Bayes classifier to predict whether a new patient with the symptoms shown below has the disease. [2 marks]

| # | Symp1 | Symp2 | Symp3 | Symp4 | Disease |
|---|---|---|---|---|---|
| 1 | yes | no | mild | yes | no |
| 2 | yes | yes | no | no | yes |
| 3 | yes | no | strong | yes | yes |
| 4 | no | yes | mild | yes | yes |
| 5 | no | no | no | no | no |
| 6 | no | yes | strong | yes | yes |
| 7 | no | yes | strong | no | no |
| 8 | yes | yes | mild | yes | yes |

For a new patient:

| Symp1 | Symp2 | Symp3 | Symp4 | Disease |
|---|---|---|---|---|
| yes | no | mild | yes | ? |
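A minimal sketch of the Naïve Bayes computation for this table, assuming plain maximum-likelihood counts with no Laplace smoothing (every count needed here happens to be non-zero). `naive_bayes_scores` is a hypothetical helper name; exact fractions are used so the arithmetic can be checked by hand.

```python
from fractions import Fraction

# Training rows copied from the table: (Symp1, Symp2, Symp3, Symp4, Disease)
ROWS = [
    ("yes", "no", "mild", "yes", "no"),
    ("yes", "yes", "no", "no", "yes"),
    ("yes", "no", "strong", "yes", "yes"),
    ("no", "yes", "mild", "yes", "yes"),
    ("no", "no", "no", "no", "no"),
    ("no", "yes", "strong", "yes", "yes"),
    ("no", "yes", "strong", "no", "no"),
    ("yes", "yes", "mild", "yes", "yes"),
]


def naive_bayes_scores(rows, query):
    """Return the unnormalised score P(c) * prod_i P(x_i | c) for each class c."""
    scores = {}
    for c in sorted({r[-1] for r in rows}):
        in_class = [r for r in rows if r[-1] == c]
        score = Fraction(len(in_class), len(rows))  # prior P(c)
        for i, value in enumerate(query):
            matches = sum(1 for r in in_class if r[i] == value)
            score *= Fraction(matches, len(in_class))  # P(x_i | c), no smoothing
        scores[c] = score
    return scores


new_patient = ("yes", "no", "mild", "yes")
scores = naive_bayes_scores(ROWS, new_patient)
prediction = max(scores, key=scores.get)
total = sum(scores.values())
for c, s in scores.items():
    print(c, s, round(float(s / total), 4))  # class, raw score, normalised posterior
print("prediction:", prediction)
```

With the table as given, the scores are (5/8)(3/5)(1/5)(2/5)(4/5) = 3/125 = 0.024 for Disease = yes and (3/8)(1/3)(2/3)(1/3)(1/3) = 1/108 ≈ 0.0093 for Disease = no, so the sketch predicts yes.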

Can logistic regression be applied to a multi-class classification problem? State true or false. [1 mark]

Why are log probabilities computed instead of probabilities? [1 mark]

To make computation consistent

To factor into smaller values of probabilities

To factor into larger values of probabilities

None of these
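A small illustration of the numerical issue behind using log probabilities, with a hypothetical set of 100 feature likelihoods of 1e-5 each (values chosen only to force underflow). Multiplying them in double precision underflows to 0.0, while summing their logs stays finite; since log is monotonic, comparing classes by summed log probabilities picks the same winner as comparing the products.

```python
import math

# Hypothetical example: 100 independent feature likelihoods of 1e-5 each
probs = [1e-5] * 100

product = 1.0
for p in probs:
    product *= p  # true value is 1e-500, far below the smallest positive double

log_score = sum(math.log(p) for p in probs)  # about -1151.3, easily representable

print(product)    # underflows to 0.0
print(log_score)
```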

1. In a linear relationship y = m*x+b, y is said to be dependent on x when: [1 mark]

m is closer to zero.

m is far from zero.

b is far from zero.

b is closer to zero.

2. In a linear relationship between y and x, y is not dependent on x when: [1 mark]

The coefficient is closer to zero.

The coefficient is far from zero.

The intercept is far from zero.

The intercept is closer to zero.

3. In a linear regression model y = w0 + w1*x, if the true relationship between y and x is y = 7.5 + 3.2x, then w0 acts as: [1 mark]

Intercepts

Coefficients

Estimators

Residuals

Question 2.

The following backpropagation network uses an activation function called leaky ReLU, which outputs the input itself if input >= 0 and 0.1 * input if input < 0. At a particular iteration, the weights are as indicated in the following figure. The training error is given by E = 0.5*(t - y)^2, where t is the target output and y is the actual output of the network. What are the outputs of the hidden nodes and the actual final output y of the network with x1 = x2 = 1? What will the weights w31 and w12 be in the next iteration, with learning rate = 0.1, x1 = x2 = 1, and target output t = 0? Assume the derivative of the activation function is 0 at input = 0, and zero bias at all nodes. [1+1+1+1.5+2.5=7 marks]

Question 3.

Consider training a boosting classifier using decision stumps on the following data set:

1. Circle the examples whose weights will be increased at the end of the first iteration. [2 marks]

2. How many iterations will it take to achieve zero training error? Explain. [3 marks]
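The data set for this question is not reproduced, so the sketch below shows one AdaBoost reweighting round on a hypothetical 5-point example. AdaBoost is assumed as the boosting algorithm, since the question only says "a boosting classifier". It illustrates the rule behind part 1: misclassified points are scaled by e^{+alpha} and correctly classified ones by e^{-alpha}, then all weights are renormalised.

```python
import math


def adaboost_reweight(weights, y_true, y_pred):
    """One AdaBoost round: return (alpha, normalised new weights). Labels are +1/-1."""
    total = sum(weights)
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / total
    alpha = 0.5 * math.log((1 - err) / err)  # assumes 0 < err < 0.5
    # t * p = -1 for a mistake, so mistakes get multiplied by e^{+alpha}
    unnormalised = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    z = sum(unnormalised)
    return alpha, [w / z for w in unnormalised]


# Hypothetical example: the first stump misclassifies only the last point
weights = [0.2] * 5
y_true = [1, 1, -1, -1, 1]
y_pred = [1, 1, -1, -1, -1]
alpha, new_weights = adaboost_reweight(weights, y_true, y_pred)
print(round(alpha, 4), [round(w, 3) for w in new_weights])
```

Here the weighted error is 0.2, alpha = 0.5 ln 4 = ln 2, and the single misclassified point ends up with weight 0.5 while each of the others drops to 0.125.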

A new mobile phone service chain store would like to open 20 service centres in Bangalore. Each service centre should cover at least one shopping centre and 5,000 households with an annual income over 75,000. Design a scalable algorithm that decides the locations of the service centres, taking all the above constraints into consideration. [5 marks]
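One possible approach, sketched under stated assumptions rather than as the expected answer: filter households to those with income over 75,000, run k-means with k = 20 on their locations to propose centres, then check each proposed centre against the two constraints and adjust (for example, re-seed or merge clusters) where a check fails. The adjustment step is not implemented. Households are modelled as individual 2-D points and each shopping centre is assigned to its nearest centre; both choices, and the helper names `kmeans` and `check_constraints`, are hypothetical. For city-scale data, a mini-batch k-means variant (for example scikit-learn's `MiniBatchKMeans`) is a common way to scale.

```python
import math


def kmeans(points, k, iters=100):
    """Plain Lloyd's k-means; returns (centres, clusters)."""
    # Deterministic init from the first k points (assumption; k-means++ is more usual)
    centres = [tuple(points[i]) for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centres[c]))
            clusters[nearest].append(p)
        new_centres = [
            (tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centres[j])
            for j, cl in enumerate(clusters)
        ]
        if new_centres == centres:  # converged
            break
        centres = new_centres
    return centres, clusters


def check_constraints(centres, clusters, shops, min_households):
    """For each centre: True if it has >= min_households households and >= 1 shop."""
    shop_count = [0] * len(centres)
    for s in shops:
        nearest = min(range(len(centres)), key=lambda c: math.dist(s, centres[c]))
        shop_count[nearest] += 1
    return [len(cl) >= min_households and shop_count[j] >= 1 for j, cl in enumerate(clusters)]


# Toy run (k = 2 instead of 20, 2 households instead of 5,000)
toy_households = [(0, 0), (0, 1), (10, 10), (10, 11)]
toy_centres, toy_clusters = kmeans(toy_households, k=2)
ok = check_constraints(toy_centres, toy_clusters, shops=[(1, 1)], min_households=2)
print(toy_centres, ok)
```

In the toy run, the second cluster fails because no shopping centre is nearest to it, which is exactly the case the (unimplemented) adjustment step would have to handle.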

Question 4.

In a clinical trial, the height and weight of patients are recorded as shown in the table below. For an incoming patient with weight = 58 kg and height = 180 cm, use the KNN algorithm with K = 3 to classify the patient as Under-weight or Normal. [5 marks]

| Weight (kg) | Height (cm) | Class |
|---|---|---|
| 61 | 190 | Under-weight |
| 62 | 182 | Normal |
| 57 | 185 | Under-weight |
| 51 | 167 | Under-weight |
| 69 | 176 | Normal |
| 56 | 174 | Under-weight |
| 60 | 173 | Normal |
| 55 | 172 | Normal |
| 65 | 172 | Normal |
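A minimal KNN sketch for this table. The question does not mention feature scaling, so raw (unscaled) Euclidean distance on weight and height is assumed; standardising the features is a common alternative and could change which neighbours are chosen. `knn_classify` is a hypothetical helper name.

```python
import math
from collections import Counter

# (weight in kg, height in cm, class) copied from the table
PATIENTS = [
    (61, 190, "Under-weight"),
    (62, 182, "Normal"),
    (57, 185, "Under-weight"),
    (51, 167, "Under-weight"),
    (69, 176, "Normal"),
    (56, 174, "Under-weight"),
    (60, 173, "Normal"),
    (55, 172, "Normal"),
    (65, 172, "Normal"),
]


def knn_classify(data, query, k):
    """Majority vote among the k nearest rows by unscaled Euclidean distance."""
    ranked = sorted(data, key=lambda row: math.dist(row[:2], query))
    neighbours = ranked[:k]
    votes = Counter(label for _, _, label in neighbours)
    return votes.most_common(1)[0][0], neighbours


label, neighbours = knn_classify(PATIENTS, (58, 180), k=3)
for w, h, c in neighbours:
    print(w, h, c, round(math.dist((w, h), (58, 180)), 2))
print("prediction:", label)
```

The three nearest rows are (62, 182) Normal, (57, 185) Under-weight and (56, 174) Under-weight, at distances of about 4.47, 5.10 and 6.32, so the majority vote is Under-weight.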

Question 5.

Consider the following data, where x1 and x2 are the features:

Positive Points: {(3, 1), (5, 2), (1, 1), (2, 2), (6, -1)}

Negative Points: {(-3, 1), (-2, 2), (0, 3), (-3, 4), (-1, 5)}

Derive the equation of the hyperplane and compute the model parameters. [7 marks]
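Assuming the question intends a hard-margin linear SVM, one way to derive the hyperplane is geometric. The closest points between the convex hulls of the two classes are the negative point (0, 3) and (1.5, 1.5) on the positive segment from (1, 1) to (2, 2). The perpendicular bisector of that segment, rescaled so the support vectors have functional margin 1, gives w = (2/3, -2/3) and b = 1. The sketch below does not solve the SVM. It checks this hand-derived candidate (and candidate dual variables alpha, also derived by hand) against the KKT conditions with exact fractions.

```python
import math
from fractions import Fraction as F

POS = [(3, 1), (5, 2), (1, 1), (2, 2), (6, -1)]
NEG = [(-3, 1), (-2, 2), (0, 3), (-3, 4), (-1, 5)]

# Candidate hard-margin solution (derived by hand, see lead-in)
w = (F(2, 3), F(-2, 3))
b = F(1)
# Candidate dual variables: non-zero only for the assumed support vectors
alpha = {(1, 1): F(2, 9), (2, 2): F(2, 9), (0, 3): F(4, 9)}

data = [(p, 1) for p in POS] + [(n, -1) for n in NEG]
labels = dict(data)


def functional_margin(x, y):
    return y * (w[0] * x[0] + w[1] * x[1] + b)


margins = {x: functional_margin(x, y) for x, y in data}
# Primal feasibility: every point satisfies y * (w.x + b) >= 1
assert all(m >= 1 for m in margins.values())
# Complementary slackness: support vectors lie exactly on the margin
assert all(margins[x] == 1 for x in alpha)
# Stationarity: w = sum_i alpha_i y_i x_i, and sum_i alpha_i y_i = 0
w_from_alpha = tuple(sum(alpha[x] * labels[x] * x[k] for x in alpha) for k in range(2))
assert w_from_alpha == w
assert sum(alpha[x] * labels[x] for x in alpha) == 0

margin_width = 2 / math.hypot(float(w[0]), float(w[1]))
print("hyperplane: (2/3)*x1 - (2/3)*x2 + 1 = 0, margin width:", round(margin_width, 4))
```

If all assertions pass, the candidate is the optimum: the hyperplane is (2/3)x1 - (2/3)x2 + 1 = 0, equivalently x2 = x1 + 1.5, with support vectors (1, 1), (2, 2) and (0, 3) and a margin width of 3/√2 ≈ 2.12.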